It’s been about four months since I last wrote. Things have still been a bit turbulent; nothing too stressful, but a lot has changed.

For one, I’ve moved back up home to Virginia and started my job hunt there. I came back for good (for the time being) in March. Since then, I’ve applied to whatever role was within an hour’s drive and seemed like something I could do, even if I didn’t meet most of the job requirements or have the professional experience for it.

No luck, but that was expected.

a free training opportunity

I did find one opportunity, though: free technical training in low-voltage technologies from Per Scholas, over the course of five weeks. This past Friday, I finished the course and walked away with hands-on experience with copper cabling, something I had only read about when I studied for the Network+ certification about two years ago.

My fellow cohort members were lovely and seemed to enjoy the class too. The latter part of the class was all hands-on: we made Ethernet cables, set up a LAN with Cisco switches and routers (mounted on the rack!), and troubleshot whenever things didn’t work.

After hearing what the course entailed, you might think, “Well, couldn’t you just do that at home?” I would say you probably could.

But you probably wouldn’t have access to all of the tools for free.

You probably wouldn’t have access to a teacher and mentor who could help you when you didn’t know what move to make next.

You probably wouldn’t be able to connect with others working toward the same goal, who are also looking for jobs in the tech industry.

All of it was free, and all you had to do was show up. We were also able to connect with potential local employers, and each of us has a recruiter to help navigate job opportunities.

I’m working with mine to see if I can find work sometime soon.

the final semester

As you might have read, I didn’t get around to working on my capstone during my previous term at WGU. My current term began on April 1.

I changed my mind a lot about what I really wanted to do for the capstone. I wanted to do something related to my job that would help my workplace out, but I realized that it would take a while to collect the data I needed to carry out that idea.

After about two weeks of scrolling through Kaggle and other dataset websites, I found a dataset with pictures of wild edible plants, so I thought, “Why not make a model that can recognize plants from pictures?”

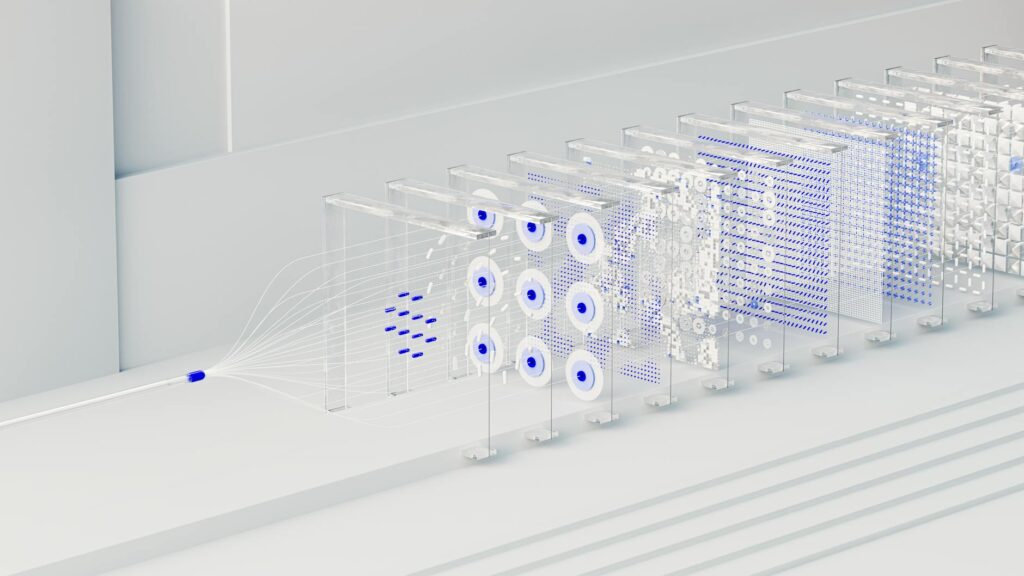

That sent me on a little journey of learning how convolutional neural networks (convnets) perform image classification.

I used a few resources to learn more about them. I probably could have built the model without this background knowledge, but I wanted to understand what each line of code meant.

- “Artificial Intelligence: A Modern Approach” (Fourth Edition) by Stuart Russell and Peter Norvig

  - I mainly read Chapter 27, which covers computer vision. I will also read Chapter 22 soon, which is about deep learning (the category convnets fall under).

  - WGU uses this textbook as of this writing.

- “But what is a convolution?” by 3Blue1Brown (on YouTube)

  - Early on, I had trouble understanding what a “kernel” was and what “convolving” meant, and this wonderful video gave a great visual explanation of both.

- “Literacy Essentials: Core Concepts Convolutional Neural Network” by Alex Schultz (via Pluralsight)

  - This gave me an overview of how convnets work in theory.

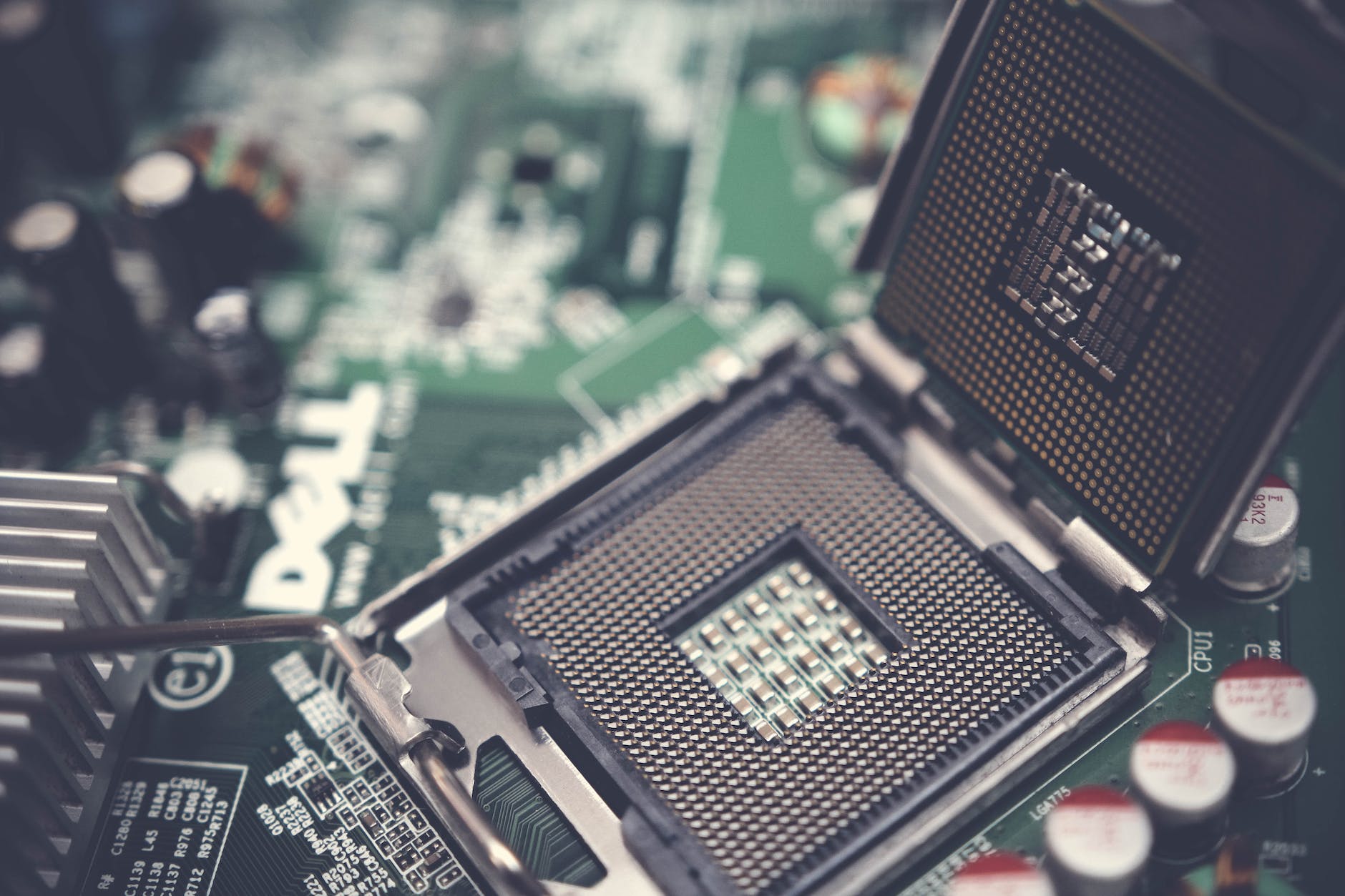
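To make “kernel” and “convolving” a little more concrete, here is a minimal pure-Python sketch of sliding a small kernel over a tiny grayscale image. It assumes no padding and a stride of 1. Like most deep learning libraries (Keras included), it does not flip the kernel, so strictly speaking it computes cross-correlation. The `convolve2d` name and the example values are my own illustration, not taken from any of the resources above.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    The kernel is not flipped, matching what deep learning libraries call
    "convolution" (strictly speaking, cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel element-wise with the patch under it, then sum.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A 4x6 image with a vertical edge: dark (0) on the left, bright (10) on the right.
image = [
    [0, 0, 0, 10, 10, 10],
    [0, 0, 0, 10, 10, 10],
    [0, 0, 0, 10, 10, 10],
    [0, 0, 0, 10, 10, 10],
]

# A hand-written 3x3 vertical-edge kernel: responds where brightness rises left to right.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

print(convolve2d(image, kernel))  # → [[0, 30, 30, 0], [0, 30, 30, 0]]
```

The output is nonzero only where the kernel straddles the edge. A convolutional layer learns the values of many such kernels during training instead of having them written by hand.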

- “Convolutional Neural Networks in Python with Keras” by Aditya Sharma (via DataCamp)

  - I wanted to follow along with a tutorial to try out the Python libraries used for machine learning, and found this simple one using the Fashion-MNIST dataset. I completed it in Visual Studio Code. At the time, though, I didn’t know whether (or how) the computer was using my GPU, or how to configure that setting.

  - I ended up installing NVIDIA CUDA and the associated CUDA Toolkit so that the model would train faster.

- “Implement Image Recognition with a Convolutional Neural Network” by Pratheerth Padman (via Pluralsight)

  - I’m currently working through this one. I thought I was having trouble getting Jupyter Notebook to work with the graphics card, but in the end, I believe the real issue was that I had forgotten that this course’s dataset (X-ray images of patients with and without pneumonia) has larger images, and its model more layers, than the DataCamp tutorial I followed.

  - It taught me how finicky libraries can get when so many of them work together (and depend on each other). I spent a few hours one day setting up a new Python environment from scratch, hunting down a compatible version of Python and matching versions of the associated libraries (like TensorFlow, scikit-learn, Keras, and so on).

  - I’m going to complete the last module, which involves optimizing the model for better performance and a better fit to the data, after I return home (I’m on an overnight trip at the moment, away from my desktop computer).
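Because version mismatches and GPU setup were the trickiest parts, a quick sanity check like the one below can help when setting up a fresh environment. This is a hedged sketch. It records installed package versions with the standard library’s `importlib.metadata`. Only if TensorFlow happens to be installed does it call `tf.config.list_physical_devices("GPU")` to see whether a GPU is visible. The package list is an assumption; adjust it to whatever the project actually uses.

```python
import importlib.metadata
import platform


def installed_versions(packages):
    """Return {distribution name: version string, or None if not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = importlib.metadata.version(name)
        except importlib.metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    return versions


def visible_gpus():
    """List the GPUs TensorFlow can see, or an empty list if TensorFlow is absent."""
    try:
        import tensorflow as tf
    except ImportError:
        return []
    return tf.config.list_physical_devices("GPU")


if __name__ == "__main__":
    print("Python", platform.python_version())
    # PyPI distribution names; this list is an assumption for this project.
    for name, version in installed_versions(["tensorflow", "keras", "scikit-learn"]).items():
        print(f"{name}: {version or 'not installed'}")
    print("GPUs visible to TensorFlow:", visible_gpus())
```

An empty GPU list usually means TensorFlow was installed without GPU support or cannot find compatible CUDA libraries. Saving the printed versions alongside the project makes the environment easier to rebuild later.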
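Why larger images matter can be shown with two standard formulas. The first is the output size of a convolution or pooling layer along one dimension: out = floor((in + 2 × padding − kernel) / stride) + 1. The second is the parameter count of a convolutional layer: (kernel_h × kernel_w × in_channels + 1) × filters. The sketch below applies both to a hypothetical first block (a 3×3 convolution with 32 filters, then 2×2 max pooling). The 28×28 size is Fashion-MNIST’s. The 150×150 X-ray size and the layer settings are assumptions for illustration, not the actual course models.

```python
def output_size(in_size, kernel, stride=1, padding=0):
    """Spatial size along one dimension after a convolution or pooling layer."""
    return (in_size + 2 * padding - kernel) // stride + 1


def conv_params(kernel, in_channels, filters):
    """Trainable parameters in a square Conv2D layer: one weight per kernel cell,
    per input channel, per filter, plus one bias per filter."""
    return (kernel * kernel * in_channels + 1) * filters


def first_block(side, filters=32):
    """Hypothetical first block: 3x3 'valid' conv with `filters` filters, then 2x2
    max pooling. Returns (values in the conv feature maps, pooled side length)."""
    conv_side = output_size(side, kernel=3)
    pooled_side = output_size(conv_side, kernel=2, stride=2)
    return conv_side * conv_side * filters, pooled_side


# The same layer has the same number of weights regardless of image size...
print(conv_params(kernel=3, in_channels=1, filters=32))  # 320
# ...but produces far more intermediate values to hold in memory per image.
print(first_block(28))   # 28x28 Fashion-MNIST image -> (21632, 13)
print(first_block(150))  # assumed 150x150 X-ray image -> (700928, 74)
```

The weights stay the same, but under these assumptions the 150×150 input produces roughly 32 times as many values after the first layer. That cost is multiplied again by the batch size, so a heavier dataset can easily look like a GPU configuration problem.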

next steps

Now that the low-voltage training has concluded, I’m planning to finish the model architecture, training, and testing portion as soon as possible. I don’t believe the model’s accuracy has to be extremely high.
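For the testing portion, one common approach is to hold out part of every plant class for validation and testing, so each class shows up in each split. Below is a minimal stratified-split sketch in plain Python. The file names, class labels, 70/15/15 ratios, and the `stratified_split` helper are all hypothetical. In practice, scikit-learn’s `train_test_split` with its `stratify` argument does the same job.

```python
import random
from collections import defaultdict


def stratified_split(samples, train=0.70, val=0.15, seed=42):
    """Split (path, label) pairs into train/val/test lists, class by class.

    Whatever remains after the train and validation shares goes to test.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    splits = {"train": [], "val": [], "test": []}
    for items in by_label.values():
        rng.shuffle(items)
        n_train = int(len(items) * train)
        n_val = int(len(items) * val)
        splits["train"].extend(items[:n_train])
        splits["val"].extend(items[n_train:n_train + n_val])
        splits["test"].extend(items[n_train + n_val:])
    return splits


# Hypothetical dataset: 20 images for each of two plant classes.
samples = [(f"{plant}_{i}.jpg", plant) for plant in ("dandelion", "chicory") for i in range(20)]
splits = stratified_split(samples)
print({name: len(items) for name, items in splits.items()})
```

Keeping the test images untouched until the very end gives an honest estimate of how the model handles pictures it has never seen.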

I do, however, want to host the solution in the cloud, or in an online environment similar to Jupyter Notebook that can be configured to use a GPU. The model itself should only need some extra fine-tuning to work with a different dataset. I would also like to let the evaluator give the model an image it has never seen before, to see how well it classifies the plant in that image.

The dataset I’m using only covers about thirty different plants, so I think I will need to add a feature that has the user choose from a pre-selected group of plant pictures instead of uploading their own; otherwise, the model would need further training to handle a wider variety of plants.
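With a fixed set of classes, presenting the model’s answer is fairly simple. The final layer produces one score per class, and a softmax turns those scores into probabilities that can be ranked. The sketch below assumes raw scores (logits) for each class. The plant names, scores, and the `top_k_predictions` helper are hypothetical. In a Keras model, a final `softmax` activation would typically handle the first step already.

```python
import math


def softmax(scores):
    """Convert raw class scores (logits) into probabilities that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_predictions(scores, class_names, k=3):
    """Return the k most likely (class name, probability) pairs, best first."""
    probs = softmax(scores)
    ranked = sorted(zip(class_names, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical logits for one pre-selected picture (a real model would output ~30).
class_names = ["dandelion", "chicory", "purslane", "wild garlic"]
scores = [2.0, 0.5, 0.1, -1.0]

for name, prob in top_k_predictions(scores, class_names, k=2):
    print(f"{name}: {prob:.1%}")
```

Showing the top few guesses rather than only the best one also gives the evaluator a sense of how confident the model is.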

I will try to keep you updated. 🙂